By Kirsten Cosser

The topic of artificial intelligence (AI) has dominated the news cycle in 2023. Central to the debate have been questions about what the technology will mean for the online information ecosystem. Concerns about election tampering, inaccurate health advice and impersonation are rife. And we have started to see examples that warrant these fears.

AI-generated images depicting political events that never happened are already in circulation. Some appear to show an explosion at the Pentagon, the headquarters of the US’s Department of Defense. Others show former US president Donald Trump being arrested and French president Emmanuel Macron caught up in a protest.

More innocuous examples have tended to be even more convincing, like images of Pope Francis, head of the Catholic church, wearing a stylish puffer jacket.

Altered images and videos being used to spread false information is nothing new. In some ways, methods for detecting this kind of deception remain the same.

But AI technologies do pose some unique challenges in the fight against falsehood – and they’re getting better fast. Knowing how to spot this content will be a useful skill as we increasingly come into contact with media that might have been tampered with or generated using AI. Here are some things to look out for in images and videos online.

Clues within the image or video itself

Tools that use AI to generate or manipulate content work in different ways, and leave behind different clues in the content. As the technology improves, though, fewer mistakes will be made. The clues discussed below are things that are distinguishable at the time of writing, but may not always be.

Check for a watermark or disclaimer

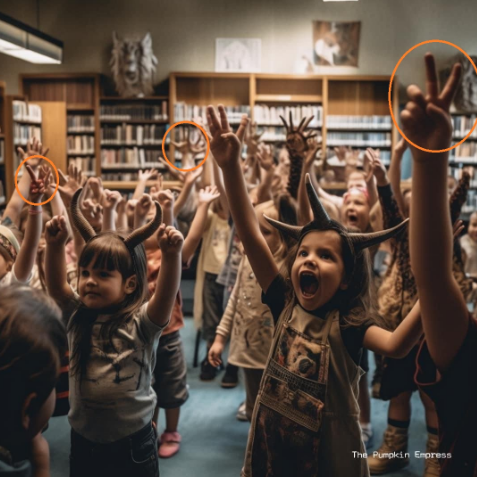

When it comes to AI-generated images, the devil is in the details. In May 2023, a series of images circulated on social media in South Africa showing young children in a library, surrounded by satanic imagery. In them, the children sit on the floor around a pentagram, the five-pointed star often associated with the occult, while being read to by demonic-looking figures, or wear costumes and horns. The scenes were dubbed the “Baphomet Book Club” by some social media users reposting the images.

A number of users, not realising the images were AI-generated, expressed their outrage. This example shows how easy it is to create images that appear realistic at first glance. It also shows how easily social media users can be fooled, even if that wasn’t the intention of the creator of the images. The user who first uploaded the images to the internet, who goes by “The Pumpkin Empress”, included a watermark with this account name in the images, though this was later removed in some of the posts.

Watermarks are designs added to something to show its authenticity or verify where it comes from. Banknotes, for example, include watermarks as a sign of authenticity. Large, visible watermarks are also placed over images on stock photo websites to prevent their use before purchase.

A quick Google search for “The Pumpkin Empress” would have led to Instagram, Twitter and Facebook accounts filled with similar AI-generated images, clearly posted by someone with a penchant for creating demonic-themed AI art, rather than documenting a real phenomenon.

This is the first major clue. Sometimes there are subtle errors in content made by AI tools, but sometimes there’s actual text or some other watermark that can quickly reveal that the images are not real photos. Although these are easy to remove later, and someone who is deliberately trying to fool you is unlikely to put a revealing watermark on an image, it’s always important to look for this as a first step.

For images

Jean le Roux is a research associate at the Atlantic Council’s Digital Forensic Research Lab. He studies technologies like AI and how they relate to disinformation. He told Africa Check that because of the way the algorithms work in current AI-powered image generators, these tools struggle to perfectly recreate certain common features of photos.

One classic example is hands. These tools initially really struggled with hands. Human figures appeared with warped or missing fingers, or with more fingers than expected. But this once-telltale sign has swiftly become less reliable as the generators have improved.

(Source: The Pumpkin Empress via Facebook. AI-generated)

The AI tools have had the same trouble with teeth as with hands, so it is still worth looking carefully at other details of human faces and bodies. Le Roux also suggested looking out for mismatched eyes and any missing, distorted, melting or even doubled facial features or limbs.

But some clues also come down to something just feeling “off” or strange at first glance, similar to the Uncanny Valley effect, where something that looks very humanlike but isn’t completely convincing evokes a feeling of discomfort. According to a guide from fact-checkers at Agence France-Presse (AFP), skin that appears too smooth or polished, or has a plasticky sheen, might also be a sign that an image is AI-generated.

(Source: The Pumpkin Empress via Facebook. AI-generated)

Accessories, AFP wrote, can also be red flags. Look out for glasses or jewellery that look as though they’ve melted into a person’s face. AI models often struggle to make accessories symmetrical and may show people wearing mismatched earrings or glasses with oddly shaped frames.

Apart from human figures, looking at the details of text, signs or the background in the image could be useful. Le Roux also noted that these tools struggled where there were repeating patterns, such as buttons on a phone or stitching on material.

But, as AI expert Henry Ajder told news site Deutsche Welle, it might be harder to spot AI-generated landscape scenes than images of human beings. If you are looking at a landscape, your best bet might be to compare the details in the image to photos you can confirm were taken in the place that the image is meant to represent.

(Source: The Pumpkin Empress via Facebook. AI-generated)

In summary, pay special attention to:

- Accessories (earrings, glasses)

- Backgrounds (odd or melting shapes or people)

- Body parts (missing fingers, extra body parts)

- Patterns (repeated small objects like device buttons, patterns on fabric, or a person’s teeth)

- Textures (skin sheen, or textures melting, blending or repeating in an unnatural-looking way)

- Lighting and shadows

For videos

Deepfakes are videos that have been manipulated using tools to combine or generate human faces and bodies. These audiovisual manipulations run on a spectrum from true deepfakes, which closely resemble reality and require specialised tools, to “cheap fakes”, which can be as simple as altering the audio in a video clip to obscure some words in a political speech, thereby changing its meaning. In these cases, finding the original unedited video can be enough to disprove a false claim.

Although realistic deepfakes might be difficult to detect, especially without specialised skills, there are often clues in cheap fakes that you can look out for, depending on what has been done, whether lip-synching, superimposing a different face onto a body or changing the video speed.

Le Roux told Africa Check that there are often clues in the audio, like the voice or accent sounding “off”. There might also be issues with body movements, especially where cheaper or free software is used. Sometimes still images are used with animated mouths or heads superimposed on them, which means there is a suspicious lack of movement in the rest of the body. A general Uncanny Valley effect is also a clue, which might show up in subtle details like the lighting, skin colour or shadows seeming unnatural.

These clues are all identifiable in a video of South African president Cyril Ramaphosa that circulated on social media in March 2023. In the video, Ramaphosa, who appeared to be addressing the country, outlined a government plan to address the ongoing energy crisis by demolishing the Voortrekker Monument and the Loftus Versfeld rugby stadium, both in the country’s administrative capital city of Pretoria, to make way for new “large-scale diesel generators”.

But the president looked oddly static, with his face and body barely moving as he spoke. His voice and accent also likely sounded strange to most South Africans, and it was crudely overlaid onto mouth movements that didn’t perfectly match up to the words.

In summary, for videos:

- Look at facial details. Pay special attention to mouth and body movement, eyes (are they moving or blinking?) and skin details.

- Listen to the voice carefully. Does it sound robotic or unnatural? Does the accent match other recordings of the person speaking? Is the voice aligned with mouth movements? Does it sound like it’s been slowed down or sped up?

- Inspect the background. Does it match the lighting and general look of the body and face? Does it make sense in the context of the video?

Context is everything

As technology advances and it becomes more difficult to detect fake images or videos, people will need to rely on other tools to separate fact from fiction. Thankfully these involve going back to the basics by looking at how an image or video fits into the world more broadly.

Whether something is a convincing, resource-intensive deepfake, or a quick cheap fake, the basic principles of detecting misinformation through context still apply.

1. Think about the overall message or feeling being conveyed. According to Le Roux, looking at context comes down to asking yourself: “What is this website trying to tell me? What are these social media accounts trying to push?”

Try to identify the emotions the content is trying to evoke. Does it seem like it’s intended to make you angry, outraged or disgusted? This might be a red flag.

2. What are other people saying? It’s often useful to look outside of your immediate reaction to see what other people think. Read the comments on a social media post, as these can give some good clues, but also look at what trustworthy sources are saying. If what is being depicted is an event with a big impact (say, the president demolishing a famous cultural monument to make way for a power station), it will almost certainly have received news coverage by major outlets. If it hasn’t, or if the suspicious content contradicts what reliable news outlets or scientific bodies are saying, that might be a clue that it’s misleading.

3. Google or Bing or DuckDuckGo. Do a search! If you have a sneaking suspicion something might not be entirely accurate (or even if you don’t), see what other information is available online about it. If a video depicts an event, say Trump being arrested, type this into a search engine.

If you think a video might have been edited in some way to change its meaning, see if you can find the original video. This might be possible by searching for the name of the event or some words relating to it (for example, “Ramaphosa address to the nation April 2023”).

You might find a reverse search works better. Though reverse searches are generally used for images, there are easy ways to reverse search videos too. Tools like the one from InVid do this for you, using screenshots from the video.

4. Compare with real images or videos. Just as you would use visual clues to work out where a photo was taken, try to identify details that you can compare with accurate information. If an image is meant to show a real person or place, then facial features, haircuts, landscapes and other details should match real life.

Source JAMLAB